What an Academic's Downfall Tells Us About Food Science


A self-described “former bad open-mic comic,” marketing professor Brian Wansink was endowed with an expressive smile and an above-average grasp of irony. Both came in handy in his lectures as director of the Food and Brand Lab at Cornell University, the role for which he is best known, and in TED Talks with titles like “From Mindless Eating to Mindlessly Eating Well.”

In the same years that his research won him a directorship at the Center for Nutrition Policy and Promotion (a branch of the U.S. Department of Agriculture), Wansink wrote books that seemed uniquely well suited to Americans’ unceasing demand for practical advice on how to eat. Over the years, he presented himself as a “pracademic” whose empirically rigorous work was still easy to apply in the real world. He became a frequent guest on morning talk shows, in large part because his ideas about dieting were consistently simple, coherent, and cheerfully explained.

But last month, Wansink was forced to resign from his position at Cornell amid charges of academic misconduct. Many researchers now refer to him as a con artist and have begun poring over every article he’s ever published, looking for signs of malfeasance. Wansink, for his part, denies any deliberate wrongdoing and says he will cooperate with a university investigation of the Food and Brand Lab. The story is all but guaranteed to make the public less trusting of the findings of behavioral scientists, including those who write about food. While most academics now agree that Wansink’s transgressions are fairly common and should have been easy to spot, there’s little consensus on how a disaster like this could be prevented in the future.

In November 2016, Wansink wrote a blog post describing a mentee who was having a hard time producing publishable material. Though the post was meant to motivate and inspire, commenters were put off by his suggestion that grad students conduct “deep data dives” into experiments whose results had been inconclusive. Many believed that, in the interest of making research sound more impressive, he was flatly endorsing a form of “p-hacking,” a derisive term for massaging data (for instance, by running many comparisons and reporting only those that reach statistical significance) until a hypothesis appears to be confirmed. (Wansink claimed he’d been misread, and the post was eventually taken down.)

“It was so bland and straightforward,” one reader commented. “It seems like, in his effort to do some kind of public engagement, he accidentally outed himself as having not the slightest understanding of the research process.”

A year and a half later, a stunning BuzzFeed report revealed e-mail exchanges in which Wansink encouraged others to reframe data sets so that the final product would “go virally [sic] big time.” The article quoted colleagues at his lab who worried that they were sacrificing their academic integrity to write nutrition articles that would generate headlines in the mainstream press. It appeared that Wansink’s priorities at the Food and Brand Lab were being actively shaped by what could be shared on social media.

Meanwhile, Jordan Anaya, an academic based in Baltimore, Maryland, began subjecting Wansink’s portfolio to a few homemade tests designed to detect improbable data. He was alarmed by what he found: papers about portion sizes at a pizzeria buffet, or overeating at a cinema concession stand, reported averages that made no mathematical sense. There were also multiple instances of data duplication and self-plagiarism, including one case in which nearly identical papers appeared in two separate journals.
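One check of this kind, known as the GRIM test and described by Nick Brown and James Heathers, rests on simple arithmetic: if n people each give a whole-number answer, their total is a whole number, so the reported mean must equal some integer divided by n. The Python below is a minimal sketch under two stated assumptions (every response is an integer, and means are reported to two decimal places); the function name `grim_consistent` is a hypothetical label for illustration, not the code Anaya or his colleagues actually used.

```python
import math


def grim_consistent(reported_mean: float, n: int, decimals: int = 2) -> bool:
    """Return True if some whole-number total divided by n rounds to reported_mean.

    Assumes every one of the n responses is an integer (e.g. slices of pizza eaten).
    """
    # The true total must be a whole number close to mean * n.
    approx_total = reported_mean * n
    target = round(reported_mean, decimals)
    # Try the whole numbers just below and just above that estimate.
    for total in (math.floor(approx_total), math.ceil(approx_total)):
        if round(total / n, decimals) == target:
            return True
    # No whole-number total can produce this mean: the figure is impossible.
    return False
```

For example, a mean of 3.45 reported for 10 diners fails the check, because no whole-number total divided by 10 lands on 3.45, while the same mean reported for 20 diners passes (69 divided by 20). With two decimal places, the test can only flag samples smaller than 100, since larger groups can produce every possible two-decimal mean.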

“What was surprising was the combination of extreme sloppiness and fraud-like behavior,” says Tim van der Zee, a Ph.D. student at Leiden University in the Netherlands who went on to compile an exhaustive dossier of the Wansink papers that had given him and his colleagues pause. “That, and the sheer extent of it. It was very weird and unexpected to find so many errors.”

Along with Nick Brown, a graduate student at the University of Groningen, also in the Netherlands, and James Heathers, a postdoctoral fellow at Northeastern University, Van der Zee and Anaya compiled the dossier entirely in their spare time. The list now contains 52 Wansink articles, and as of this writing, 14 of them have been retracted, including the six published in the prestigious Journal of the American Medical Association. Several more have been subject to corrections or formal expressions of concern.

Given Wansink’s public profile, many colleagues have wondered how he could have shepherded so many flawed articles into publication over so many years. Some have pointed to how overwhelmingly difficult it can be to get errors corrected or papers retracted.

In Wansink’s case, e-mailed requests for original data took months to produce any kind of result. A lab that produces shoddy articles has multiple opportunities to drag its feet, and even when the signs of data-cooking are clear, most academic journals have an incentive to keep corrections and retractions to a minimum, since they tend to reflect badly on the journal. Moreover, it can be hard to single out one researcher for such practices without appearing to apply the rules selectively.

“P-hacking is actually a really serious problem, but there’s never been a single paper retracted for it,” says Anaya. “If we didn’t have the media attention from BuzzFeed, Cornell probably wouldn’t have looked into this work.”

Others have pointed to flaws in the practice of peer review, which could have been swayed by Wansink’s celebrity status. “Wansink had (and I assume still has) remarkably original and entertaining ideas about food behavior and his papers posed questions that nobody else was asking,” Marion Nestle, a professor emerita of nutrition, food studies, and public health at New York University, wrote in an e-mail. “On that basis, and because not all peer reviewers pay attention to statistics (or could evaluate the data even if they did pay attention), they gave him a pass. Editors did too, for the same reasons, and because the results were so intriguing they were sure to get press attention.”

Given the amount of self-motivation it took to uncover Wansink’s missteps, some have called for major reforms in the entire process of academic publication. In Nature, the cancer researcher Keith Baggerly suggested that institutions like the U.S. Department of Health and Human Services dedicate one or two percent of their funding to grants that would pay well-meaning outsiders to investigate papers in which they had found something amiss.

This would give people like James Heathers, who has described a “vacuum” of support for his sleuthing, a greater incentive to follow up on research that didn’t look right. Ultimately, it would help ensure that fewer research dollars are misspent on faulty theories that affect how people take care of their health.

Nick Brown, the graduate student who collaborated on Van der Zee’s dossier of Wansink’s work, generally agrees. “Sometimes it feels like you’re doing meaningless nitpicking,” he said. “But then you find lots of articles that are full of elementary errors. People clearly don’t spend enough time reading and critiquing. It’s embarrassing. This is science. We’re meant to get this kind of stuff right.”

The Best Backpacking Sleeping Bags

You May Also Like

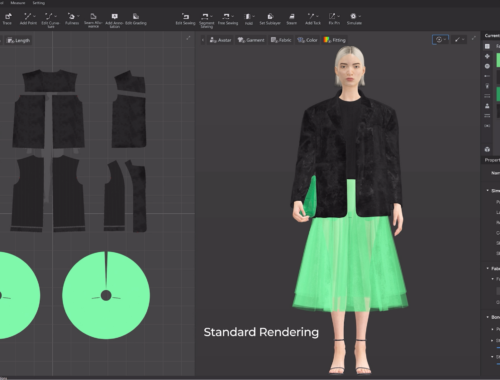

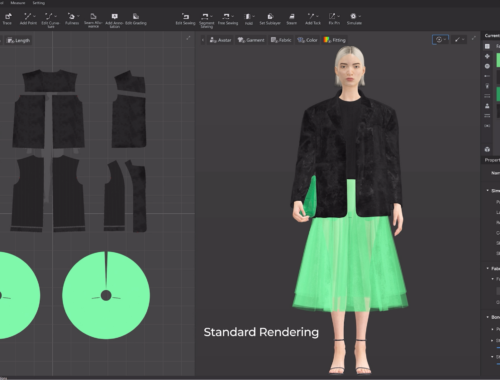

AI Meets Couture: How Artificial Intelligence is Redefining the Future of Fashion

February 28, 2025

The Future of Fashion: How Artificial Intelligence is Revolutionizing the Industry

February 28, 2025