As A Software Tester, I Question The Safety Of Self-Driving Cars

We now live in a world where everything other than a straw broom seems to be run by software. Lights turn on and off by themselves, security cameras send video of private household activities to public websites, cars steer themselves into oncoming traffic and, as we have seen from the two recent tragedies of Boeing 737 Max 8 jetliners in Indonesia and Ethiopia, sensors likely caused planes to nosedive and crash, killing more than 300 people.

We’re facing a perfect storm of autonomous technology, and we’re not ready.

When it comes to software flaws, it is easy to blame the engineers. But in my experience as an international software testing expert, we must look to the decisions made up the management chain to understand how and why bad software gets out the door in the first place.

Due to the nature and complexity of software, you can’t test to perfect. You test to good enough. And “good enough” for software that runs your Christmas tree lights is a much lower bar than “good enough” for the airline industry. Companies like Boeing do an exceptional job of testing, but they can still make strategic errors that put us all at risk. Why? Because complex software is never, ever perfect. In the drive to make a profit, shortcuts can win the day.

The more software, the more complexity, and the greater the need to focus on quality before speed to market. Because companies are always pushing hard to get new products out the door, I’ve experienced situations where executives have unwittingly moved the burden of the testing to their customers.

Given the nature of the airline industry, the worldwide attention on one small piece of the plane’s technology, and the way that the airlines work together to keep us safe, I have every confidence that Boeing will take the right corrective actions. I’m far less confident about how well we’re going to do in the self-driving car industry, however. The automotive industry operates on a completely different paradigm. Carmakers do not collectively and publicly cooperate on safety matters the way the airline industry has done from the outset.

When the airline industry was in its infancy back in the early part of the 20th century, long before airplanes had a single line of software code, competing airlines banded together to share safety knowledge and know-how. A single airplane crash was bad for the entire industry, and airlines worked to inspire confidence by forcefully addressing design and safety flaws, continuously and publicly improving the likelihood that passengers would arrive at their destinations safely.

Regulatory authorities such as the U.S. Federal Aviation Administration and the French Civil Aviation Authority secured effective governance mandates and shared safety knowledge across continents. A standardized, robust black box was installed in each airplane so that the regulatory experts could hear the voice of the pilot as they tried to recover from any technical issues encountered. Standards were established and revised based on learnings from the ever-decreasing numbers of tragedies.

Precious little of that is happening in the car industry. For one, there are no mandatory software safety standards, and each so-called “black box” in today’s cars is riddled with proprietary software that can only be read by the specific auto manufacturer that made the black box. Because car companies keep all of their software secret, it can take well over a decade to publicly pinpoint deadly engine software failure points. Planeloads of people can die because of car software, but they die in onesies and twosies, and typically the driver gets blamed for the crash, seldom the engineers (and certainly not management).

If Boeing can’t get this right, why do we think that car companies will get it right all by themselves without robust regulatory oversight and enforced safety standards? After all, a Boeing 787 Dreamliner contains somewhere around 10 million lines of software code. A car or truck on the road today can have more than 100 million lines of code.

When building complex software products, companies will “test now or test later.” Executives are the final safety gate in any major product launch, and if they don’t make the right call, they are simply asking their customers to “test later” in the real world.

Today’s cars (not just the cars of tomorrow) are already computers on wheels. I’ve been a software tester all my professional life, but I’m not volunteering to be a crash-test dummy for the automotive industry. It’s time to focus on meaningful car software safety monitoring techniques and regulations.

And we need to work together to get this right.

Patricia Herdman is the CEO and founder of GlitchTrax, and the author of “When Cars Decide to Kill.” She is a software quality and testing expert, consulting to some of the world’s most technologically complex companies for more than 20 years.


You May Also Like

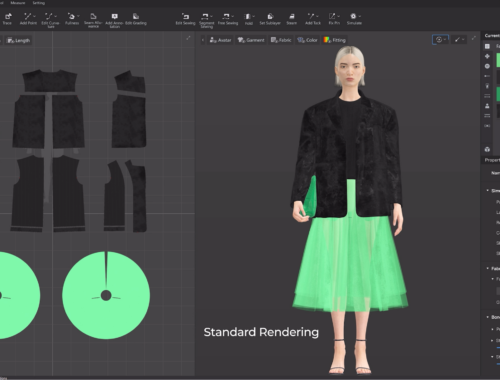

The Future of Fashion: How Artificial Intelligence is Revolutionizing the Industry

February 28, 2025

トレーラーハウスで叶える自由なライフスタイル

March 17, 2025